One of the traditional divisions in the study of ethics is that between moral philosophy, which studies questions of right and wrong, and moral psychology, which studies the way we think about right and wrong. But as the latter is ultimately grounded in the physical structures of the brain, there is also another field: moral neurophysiology. The most famous example from this field is the "natural experiment" of Phineas Gage, a man who suffered an abrupt change in his personality (and moral sensibilities) after a three-foot-long iron bar was driven through his head. Previously regarded as well-balanced, he became

fitful, irreverent, indulging at times in the grossest profanity (which was not previously his custom), manifesting but little deference for his fellows, impatient of restraint or advice when it conflicts with his desires

More recently, scientists have begun probing the physical basis of moral reasoning by putting people in MRI scanners or wiring them up to sensors while presenting them with moral dilemmas, in an effort to determine which parts of the brain are active. The results are quite interesting [PDF]. Moral philosophy is traditionally strongly Rationalist; both Kantianism and Utilitarianism are attempts to derive morality from first principles: in the former case the Categorical Imperative, and in the latter "the greatest good for the greatest number". Emotion is considered a distraction. But when you look at how the brain works, it turns out that emotion plays a significant role in moral reasoning. The authors of the paper linked above reinterpret the tension between the two great ethical systems as reflecting the tension between two parts of our brains: our basic social and emotional responses inherited from our primate ancestors, and our more recently developed facility for abstract reasoning. There's also a comment that Kantian ethics turns out not to be based on "pure practical reason", but on rationalised emotional response (a kind of abstracted "wisdom of repugnance", though with broader and better targets than Kass's revolting little work).

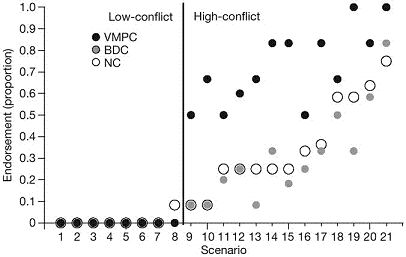

Which gets me round to the subject of my post: an article in Nature entitled "Damage to the prefrontal cortex increases utilitarian moral judgements" [PDF; if you want the graphs you'll need to get hardcopy or hit the databases]. Following the "natural experiment" paradigm, the authors recruited a group of people with damage to a specific area of the brain responsible for social emotions, and presented them with a series of moral dilemmas [PDF]. The result was a statistically significant increase in "utilitarian" judgements compared to the control groups in "high-conflict" scenarios:

(The target group is that labelled "VMPC"; the others are control groups. X-axis scenario numbers do not correspond to those in the file linked above; the Y-axis is the proportion of those saying "yes" to the utilitarian position).

The authors of the paper interpret this as consistent with both emotion and reason being required to produce normal moral judgements, and as showing that the specific area of the brain under study (the ventromedial prefrontal cortex) is a critical component in the emotional aspect of moral reasoning.

None of this, of course, tells us anything at all about what is good; it only tells us what is, not what ought to be. But it does tell us something very interesting about ourselves and the roots of our moral disagreements, and (if we believe that "ought implies can") about the shape that moral theories should take if they are not to demand the impossible.

9 comments:

Neat.

Posted by Lyndon : 5/09/2007 05:18:00 PM

So utilitarians have brain damage. As if proof were needed.

Posted by Richard : 5/09/2007 05:43:00 PM

Very interesting.

Is there somewhere we can see which scenarios in the supplementary material match which scenarios in the chart?

I need to convince my wife that I'm not a psychopath :~)

Posted by Anonymous : 5/09/2007 08:05:00 PM

ummmm it's "categorical"

Obviously, both Kant and Mill would have something to say about this, but I'm not quite sure what.

Posted by Anonymous : 5/09/2007 08:38:00 PM

Richard: No they don't, any more than Kantians do. What it tells us is that this piece of the brain is important in providing emotional input to moral reasoning, that is all.

Mark: I didn't see one, sorry (though you can obviously tell the low-conflict ones, as they're marked as such). You could always do the test for your wife and see if she likes the answers.

Macro: I'm not sure what they'd say about it either - and to be honest, I don't even know if they'd care. One obvious answer to humanity not conforming to your axiomatic theories is to say "so much the worse for humanity" (economists are the obvious example here). If ethics is purely normative, then it doesn't matter how we're wired - we simply have to do the best we can (but see above about "ought implies can"). OTOH if you think that moral theories should in some sense capture our moral intuitions (as both Kant and Mill/Bentham obviously do in important ways, while failing in others), it's a challenge to design better ones.

IMHO one of the benefits of normative ethical theories is that they challenge our moral intuitions and force us to ask "is it really good?" This sort of challenge has been valuable, and has led to a lot of moral progress in the past couple of centuries. So clearly description isn't sufficient by itself (and obviously, we could still ask "is it really good?").

Posted by Idiot/Savant : 5/09/2007 10:39:00 PM

I thoroughly enjoyed the discussions in Malcolm Gladwell's book "Blink" about the importance of correctly functioning emotional responses in generating healthy decisions.

He offers plenty of examples of specific cases where damage to various areas of the brain has produced individuals whose responses are fully rational but fundamentally useless (eg a guy who would agonise endlessly over choosing an appointment time, because he had no emotional response to any particular time and the choice therefore became an entirely rational one).

Plus the book is more emotionally pleasurable to read than rational scientific studies ;-)

Posted by Anonymous : 5/10/2007 05:55:00 AM

Idiot,

Regarding philosophy, you're ignoring a few major strands of contemporary thought. Kantians and utilitarians worry a lot about moral choices; others worry more about moral behaviour.

You should read more of the Aristotelian virtue-based ethics. Dewey went along similar lines (character-based). Martha Nussbaum is the best modern writer on this stuff.

Aristotle focuses on behaving well to establish good habits of behaviour, and to avoid akrasia (weak-willedness), which is a primary cause of immorality.

But Martha Nussbaum is the philosopher who seems most relevant here. Nussbaum has written on the notion of moral vision: the importance not of the results of moral judgement but of the preliminary step of looking at a scene and realising that there is a moral question that needs to be considered.

Posted by Mr Wiggles : 5/10/2007 04:36:00 PM

Maybe further experimentation is called for.

We could choose a random sample of Kiwiblog and Sir Humphrey's commentators, strap them down at a keyboard and start them blogging. Then slice the tops of their heads off and begin removing their brain tissue in the [alleged] South-East Asian style. The change in their political attitudes could be scientifically analysed.

Would these result in important psychological observations? - who cares!!

Posted by Rich : 5/10/2007 04:52:00 PM

"IMHO one of the benefits of normative ethical theories is that they challenge our moral intuitions and force us to ask 'is it really good?' This sort of challenge has been valuable, and has led to a lot of moral progress in the past couple of centuries. So clearly description isn't sufficient by itself (and obviously, we could still ask 'is it really good?')" Yes, I agree!

And as Sean points out, there are almost more philosophical positions on this than you can shake a stick at (hmmmmm, maybe not the best choice of words considering the current child-beating climate, but a good example of what you are referring to!)

Posted by Anonymous : 5/12/2007 09:01:00 PM
